Artificial intelligence is revolutionizing healthcare, entertainment, and even companionship, but not all AI is used for good. In 2025, scammers are weaponizing AI to trick, confuse, and steal from older adults with disturbing speed and sophistication.

This guide will help you spot and stop AI-powered scams targeting seniors. Whether it’s a deepfake phone call or a fake AI chatbot, knowing the signs and setting up protections can keep you—and your loved ones—safe.

Why AI Scams Are on the Rise in 2025

AI is now advanced enough to:

- Clone a voice from as little as 10 seconds of audio

- Generate fake video calls that look real

- Mimic the writing style of loved ones

- Send realistic chatbot messages that impersonate banks, Medicare, or family members

And older adults are being specifically targeted because scammers assume they’re:

- Less familiar with AI or deepfake technology

- More trusting of familiar names or voices

- Financially stable or managing retirement funds

According to the FTC, seniors lost over $3.8 billion to fraud in 2024, with AI scams emerging as the fastest-growing threat.

Common AI Scams Targeting Seniors

1. Voice Cloning Scams (The “Grandparent Scam” 2.0)

How it works:

You receive a call from someone who sounds exactly like your child or grandchild, asking for urgent help with bail, hospital bills, or travel. It’s actually an AI voice clone created from publicly available recordings, such as videos posted on social media.

How to protect yourself:

- Hang up and call the real person directly

- Set up a family “safe word” for real emergencies

- Avoid sharing your voice in public posts (like Facebook comments or TikTok videos)

2. Deepfake Video Calls

How it works:

Scammers use AI to create video calls that imitate a loved one’s face and voice, but the person on screen isn’t real. These fake calls are used to request money, gift cards, or banking access.

How to protect yourself:

- Ask the caller to do something random on camera (e.g., raise their hand or say a code word)

- End the call and reach out to your family through trusted contact methods

- Be skeptical of any video call with emergency demands for money

3. AI Chatbots Pretending to Be Customer Service

How it works:

You search online for tech help or Medicare support and click a link to a fake site. An AI chatbot appears and offers to “help,” then asks for your login info or payment details.

How to protect yourself:

- Always check the website’s URL (look for a .gov address or the company’s official domain)

- Never share passwords or full Social Security numbers through a chatbot

- Use trusted links only, such as Medicare.gov or SSA.gov

4. Romance Scams Powered by AI

How it works:

You meet someone online who seems perfect. They send thoughtful messages, remember details, and say all the right things. But it’s an AI bot trained to build emotional trust—and eventually request money.

How to protect yourself:

- Be wary of overly flattering or fast-moving relationships

- Use video chats early to confirm the person is real

- Reverse-image search profile photos to check for fakes

5. Fake AI Investment Advice

How it works:

Scammers create fake AI “retirement bots” promising guaranteed returns through crypto or AI trading platforms. These often mimic legitimate websites and lure seniors into sending money or personal data.

How to protect yourself:

- Don’t trust ads with “guaranteed ROI” or “AI-powered retirement plans”

- Verify platforms through Investor.gov

- Consult with a licensed financial advisor before making any investments

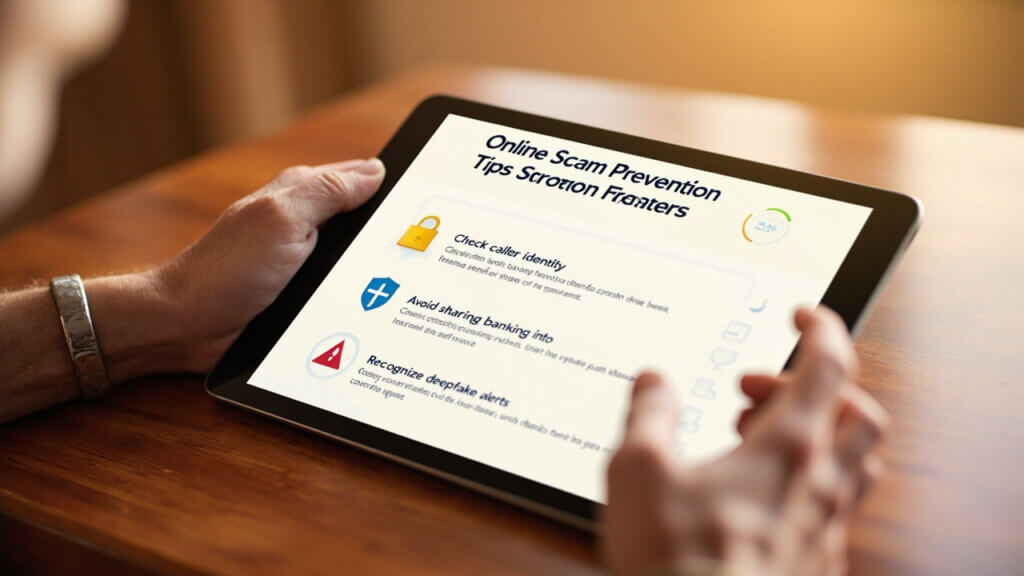

7 Practical Ways to Protect Yourself from AI Scams

1. Use a Password Manager

Avoid using the same password on multiple sites. Use a password manager to create strong, unique passwords that are hard for AI bots to crack.

2. Enable Two-Factor Authentication

Require a second step—like a text code or fingerprint—to log in to your important accounts.

3. Update Devices Regularly

Keep your phone, tablet, and computer updated with the latest security patches.

4. Be Skeptical of Urgency

AI scams often pressure you to act fast. If something feels rushed, pause and verify.

5. Avoid Sharing Personal Info Online

Even something as simple as a video of your voice or your birthday in a Facebook post can give scammers what they need to clone your voice or impersonate you.

6. Talk About AI Scams With Family

Let your children or grandchildren know about “voice cloning” scams so they don’t unintentionally post content that could be used against them—or you.

7. Use Verified, Trusted Websites

Bookmark official links such as Medicare.gov, SSA.gov, and Investor.gov.

FAQs About AI Scams and Senior Safety

Can someone really clone my voice that easily?

Yes. In 2025, AI can mimic a person’s voice with as little as 10 seconds of audio—often pulled from voicemails, podcasts, or public videos.

How can I tell if a video call is fake?

Watch for delayed reactions, blurry mouth movements, or generic backgrounds. Ask the caller to do something unexpected to verify they’re real.

What should I do if I was scammed?

- Contact your bank immediately

- Report the scam at ReportFraud.ftc.gov

- File an identity theft report at IdentityTheft.gov

- Let a trusted family member know right away

Final Thoughts: Stay Informed, Stay Connected, Stay Safe

AI scams in 2025 may be smart—but so are you. With the right knowledge and support, you can enjoy the benefits of today’s tech without falling victim to its dark side.

Take a moment to review your online habits, set up safeguards, and talk to your family. The more we share this knowledge, the safer everyone becomes.

Have you or someone you know experienced an AI scam?

Your story could help protect someone else. Speak up and stay connected.